Exploring AWS Serverless Deployments with CDK v2: From RSS to X Posts - Part 2 of the Odyssey

In this blog post, we’ll continue our exploration of AWS Serverless deployments with CDK v2 by focusing on Lambda functions.

We’ll explore how to create and integrate these functions into our architecture along with a crucial step of granting permissions to resources that are deployed within the stack.

Lambda

The first Lambda function that we will create will periodically query an RSS feed, process the data and store it in DynamoDB.

To get started with Lambda, we will use the Amazon Lambda Python Library, which provides constructs for Python Lambda functions. The library bundles dependencies inside a container, so Docker must be installed and running.

Add the library to the stack’s requirements file; on PyPI it is published as aws-cdk.aws-lambda-python-alpha.

Next we will create a directory for our first Lambda function:

    mkdir lambda_rss_ddb_func

Inside the new directory, let’s create a lambda_handler.py file that will contain the code that performs the magic:

    code lambda_handler.py

The next file that we will create will be the requirements file for all our Python dependencies:

    code requirements.txt

We will only be using the requests library in the Lambda function, so make sure to include requests in the newly created requirements.txt.

Time to write some code in the lambda_handler.py file. The code extracts the post id, post title and link from a website feed (we will be using the feed from Hypebeast) and then inserts the extracted data into our DynamoDB table.

Below is the code that you can populate your lambda_handler.py file with:
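What follows is a minimal sketch of such a handler, not the exact code from the original project. It assumes the feed is standard RSS 2.0, that the table’s partition key is called id, and that the feed URL and table name arrive through hypothetical FEED_URL and TABLE_NAME environment variables. The helper name parse_feed is also an illustration.

```python
import os
import xml.etree.ElementTree as ET

# Assumptions: both values are hypothetical placeholders that the stack
# would pass in as environment variables.
FEED_URL = os.environ.get("FEED_URL", "https://example.com/feed")
TABLE_NAME = os.environ.get("TABLE_NAME", "rss-posts")


def parse_feed(xml_text):
    """Return a list of {id, title, link} dicts, one per <item> in an RSS 2.0 feed."""
    root = ET.fromstring(xml_text)
    posts = []
    for item in root.iter("item"):
        link = (item.findtext("link") or "").strip()
        # Fall back to the link when the item has no <guid> element.
        post_id = (item.findtext("guid") or link).strip()
        title = (item.findtext("title") or "").strip()
        if post_id and link:
            posts.append({"id": post_id, "title": title, "link": link})
    return posts


def lambda_handler(event, context):
    # Imported here so parse_feed can be tried locally without these packages:
    # requests is installed from requirements.txt, boto3 ships with the runtime.
    import boto3
    import requests

    response = requests.get(FEED_URL, timeout=10)
    response.raise_for_status()
    posts = parse_feed(response.content)

    table = boto3.resource("dynamodb").Table(TABLE_NAME)
    # overwrite_by_pkeys de-duplicates items that share a key within a batch.
    # "id" as the partition key name is an assumption.
    with table.batch_writer(overwrite_by_pkeys=["id"]) as batch:
        for post in posts:
            batch.put_item(Item=post)
    return {"stored": len(posts)}
```

Keeping the parsing in its own function means it can be checked against a saved copy of the feed before anything is deployed.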

Once you have modified your stack to include the construct to create a Lambda function, make sure to change the directory in your terminal to the root folder of the project.

Let’s turn our attention back to our stack to define the Lambda construct. Add the code below:
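As a rough sketch, the construct could look like the helper below, which would be called from the stack’s `__init__` as `add_rss_function(self, table)`. The construct id, runtime version, timeout and the TABLE_NAME environment variable are assumptions for illustration; only the directory and file names come from the steps above.

```python
from aws_cdk import Duration, aws_dynamodb as dynamodb, aws_lambda as _lambda
from aws_cdk.aws_lambda_python_alpha import PythonFunction
from constructs import Construct


def add_rss_function(scope: Construct, table: dynamodb.ITable) -> PythonFunction:
    """Define the Lambda function that reads the feed and writes to the table."""
    return PythonFunction(
        scope,
        "LambdaRssDdbFunc",                # hypothetical construct id
        entry="lambda_rss_ddb_func",       # directory created earlier
        index="lambda_handler.py",         # file inside that directory
        handler="lambda_handler",          # function name inside the file
        runtime=_lambda.Runtime.PYTHON_3_12,
        timeout=Duration.seconds(30),      # assumed; the default is 3 seconds
        # Assumed variable name, read by the handler to locate the table.
        environment={"TABLE_NAME": table.table_name},
    )
```

PythonFunction installs the packages listed in the directory’s requirements.txt while bundling, which is why Docker has to be running at synth and deploy time.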

Amazon EventBridge rule

To run the Lambda function on a schedule, we can use an Amazon EventBridge rule that periodically invokes it. Add the below construct and permissions to the stack:

Another Lambda function

Shortly we will create another directory for our second Lambda function, which is invoked when new record(s) are added to our DynamoDB table and creates a post on X with the post title and post link.

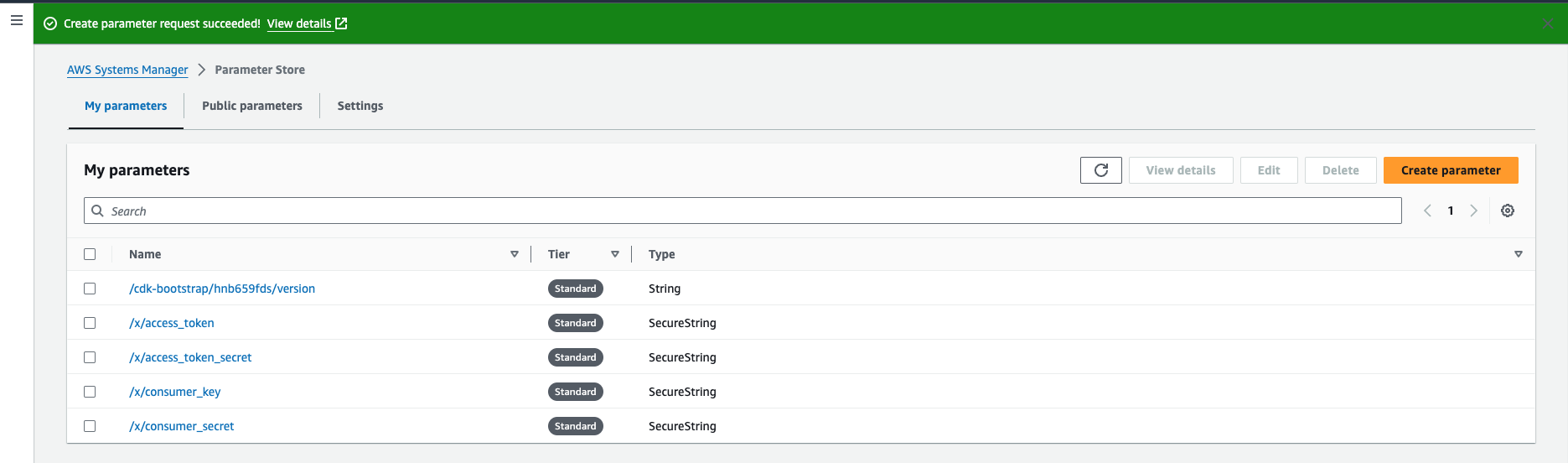

SSM Parameter Store

To access the X API, you’ll need to create an X Developer account; I’ve included the link in the resources section of this post.

We will need X credentials (consumer key, consumer secret, access token & access token secret).

These credentials need to be stored somewhere secure. The SSM Parameter Store is a free service that fulfills this requirement well.

- Navigate to the Parameter Store under AWS Systems Manager in the AWS Management Console.

- Select the Create parameter button.

- In the Name textbox, enter /x/consumer_key, select SecureString under Type and paste your consumer_key in the Value textbox.

- Repeat the above process for the remaining credentials (consumer secret, access token & access token secret).

With the X API setup out of the way, let’s create that directory:

    mkdir lambda_x_share_func

Within this directory, create another lambda_handler.py file and a requirements.txt file.

Below is the code you should insert in the newly created lambda_handler.py file in the lambda_x_share_func directory:
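The sketch below shows one possible shape for this handler; it is an illustration, not the original code. It assumes the stored items have title and link string attributes, and that the remaining three parameters follow the same naming pattern as /x/consumer_key (those three names are hypothetical). The region is read from AWS_REGION, which the Lambda runtime sets.

```python
import os

# Assumption: only /x/consumer_key is named in the steps above; the other
# three parameter names are hypothetical and follow the same pattern.
PARAMETER_NAMES = {
    "consumer_key": "/x/consumer_key",
    "consumer_secret": "/x/consumer_secret",
    "access_token": "/x/access_token",
    "access_token_secret": "/x/access_token_secret",
}


def new_posts(event):
    """Return (title, link) pairs for items newly inserted into the table."""
    posts = []
    for record in event.get("Records", []):
        if record.get("eventName") != "INSERT":
            continue  # ignore MODIFY and REMOVE stream events
        image = record["dynamodb"]["NewImage"]
        # Stream images use DynamoDB's typed JSON, e.g. {"S": "some text"}.
        posts.append((image["title"]["S"], image["link"]["S"]))
    return posts


def load_credentials(region):
    """Fetch and decrypt the four X credentials from the Parameter Store."""
    import boto3  # ships with the Lambda runtime

    ssm_client = boto3.client("ssm", region_name=region)
    response = ssm_client.get_parameters(
        Names=list(PARAMETER_NAMES.values()), WithDecryption=True
    )
    values = {p["Name"]: p["Value"] for p in response["Parameters"]}
    return {key: values[name] for key, name in PARAMETER_NAMES.items()}


def lambda_handler(event, context):
    import tweepy  # installed from requirements.txt

    posts = new_posts(event)
    if not posts:
        return {"posted": 0}
    credentials = load_credentials(os.environ["AWS_REGION"])
    client = tweepy.Client(**credentials)
    for title, link in posts:
        client.create_tweet(text=f"{title} {link}")
    return {"posted": len(posts)}
```

Filtering on INSERT keeps the function from posting again if an existing item is later modified or deleted.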

You’ll need to specify a region when initializing the ssm_client. Early on I noticed that Lambda was unable to access the values in the Parameter Store, which was strange, as all the documentation I read seemed to indicate that the Lambda function should have been able to access the Parameter Store in the same region.

In the requirements.txt file, make sure to include tweepy. That’s the library that we will use in our Lambda function to interact programmatically with X.

Navigate back to the stack. We will now create a construct for the LambdaShareFunc. Add the code below:
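A possible definition, sketched as a helper that the stack would call with `add_share_function(self)`. The runtime version and timeout are assumptions; the construct id and directory name are taken from the text above.

```python
from aws_cdk import Duration, aws_lambda as _lambda
from aws_cdk.aws_lambda_python_alpha import PythonFunction
from constructs import Construct


def add_share_function(scope: Construct) -> PythonFunction:
    """Define the Lambda function that posts new items to X."""
    return PythonFunction(
        scope,
        "LambdaShareFunc",
        entry="lambda_x_share_func",   # directory holding the handler and tweepy requirement
        index="lambda_handler.py",
        handler="lambda_handler",
        runtime=_lambda.Runtime.PYTHON_3_12,  # assumed runtime version
        timeout=Duration.seconds(30),         # assumed; leaves room for the X API call
    )
```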

For our Lambda function to read the Parameter Store values in SSM, we can create an SSM policy statement that grants the function permission to retrieve the secrets.

We need to allow our Lambda function to act when new items are added to our DynamoDB table. This can be achieved using DynamoDB Streams, so let’s add another construct.

One last code addition to our stack is to enable DynamoDB Streams on our Table. This is achieved by adding stream=dynamodb.StreamViewType.NEW_IMAGE in the table construct:

We’re on the final stretch. Ensure that Docker is running.

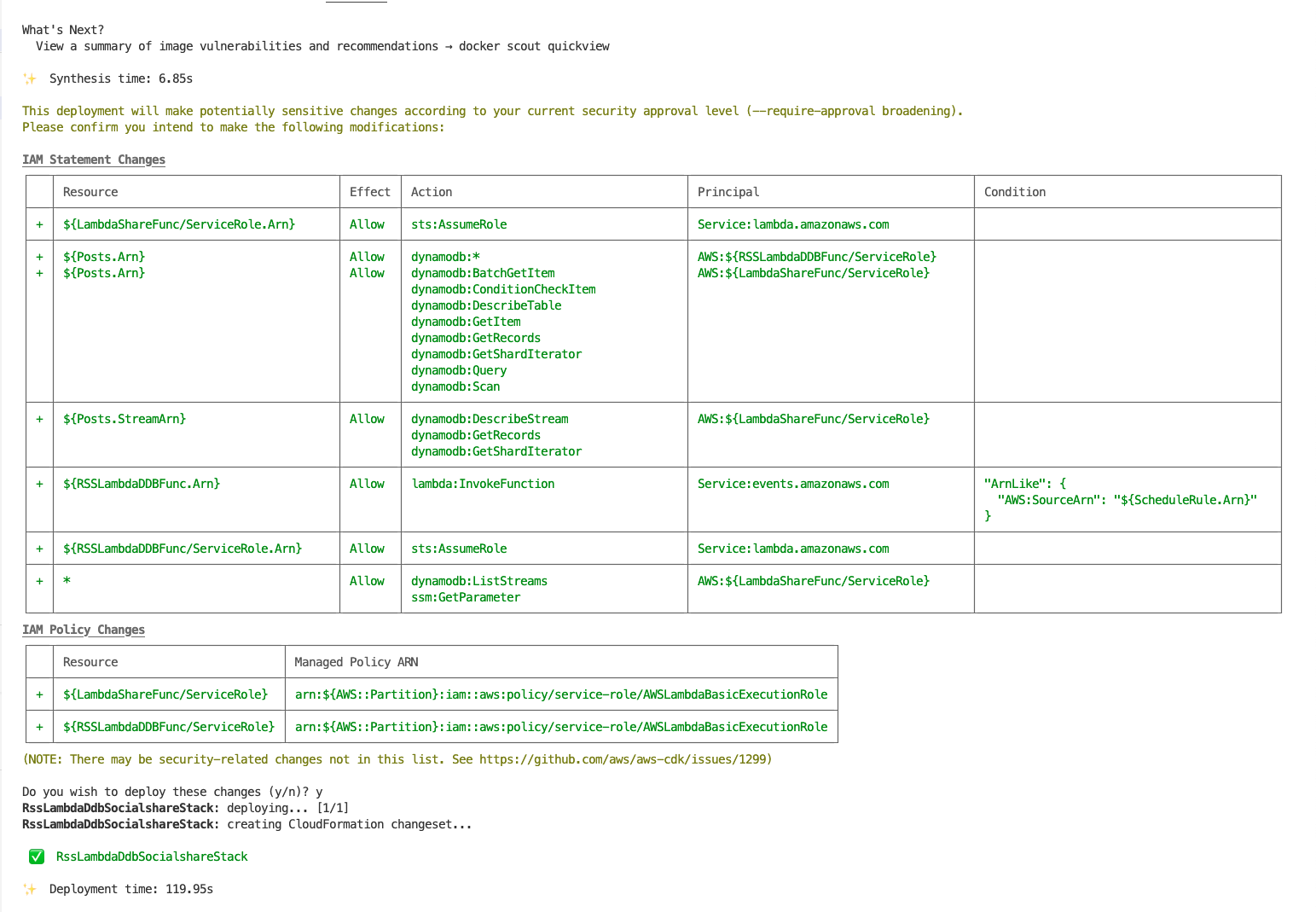

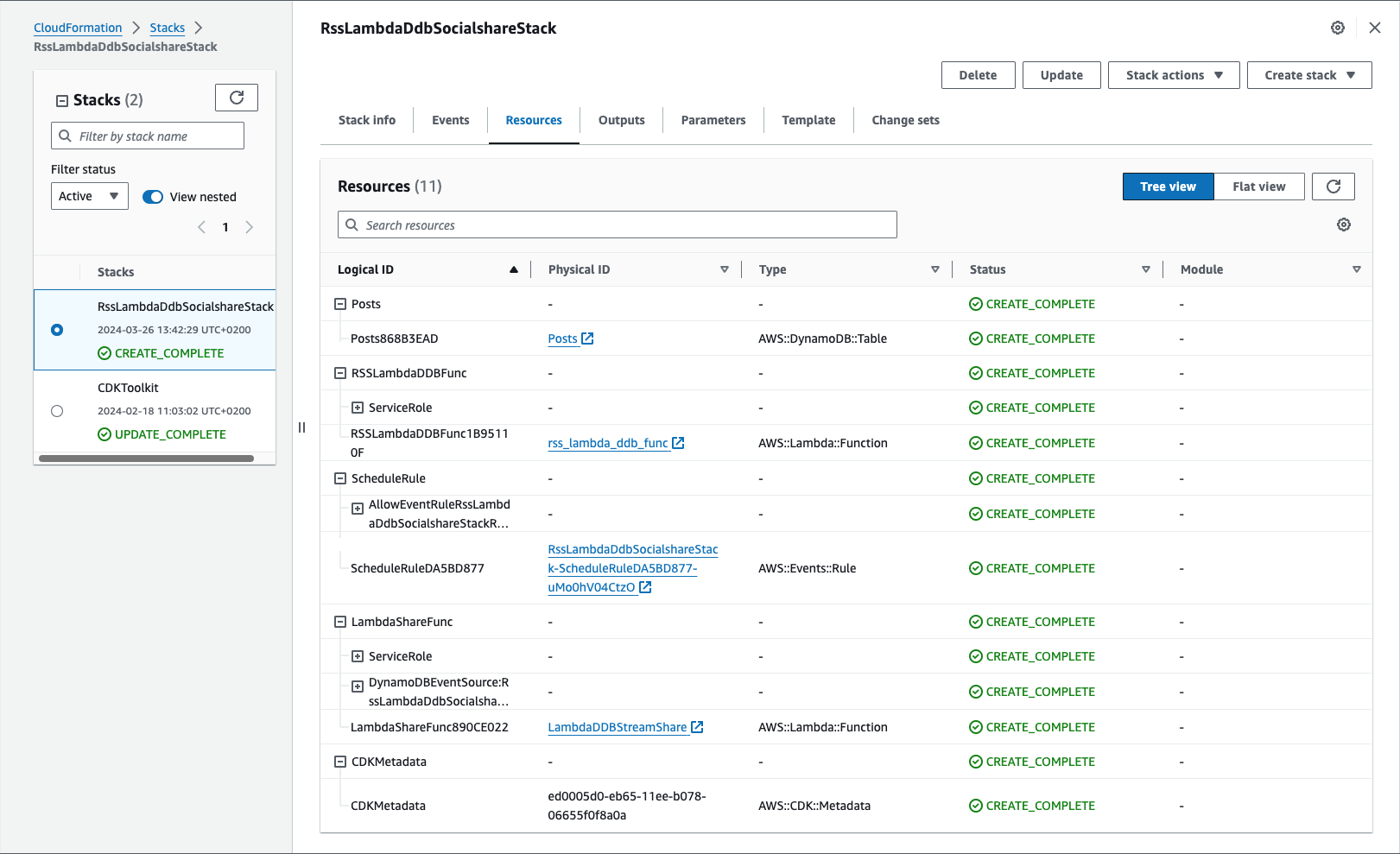

We can now deploy the stack using cdk deploy, which will take a few moments.

Below are screenshots from my terminal window and AWS Management Console of the successful deployment:

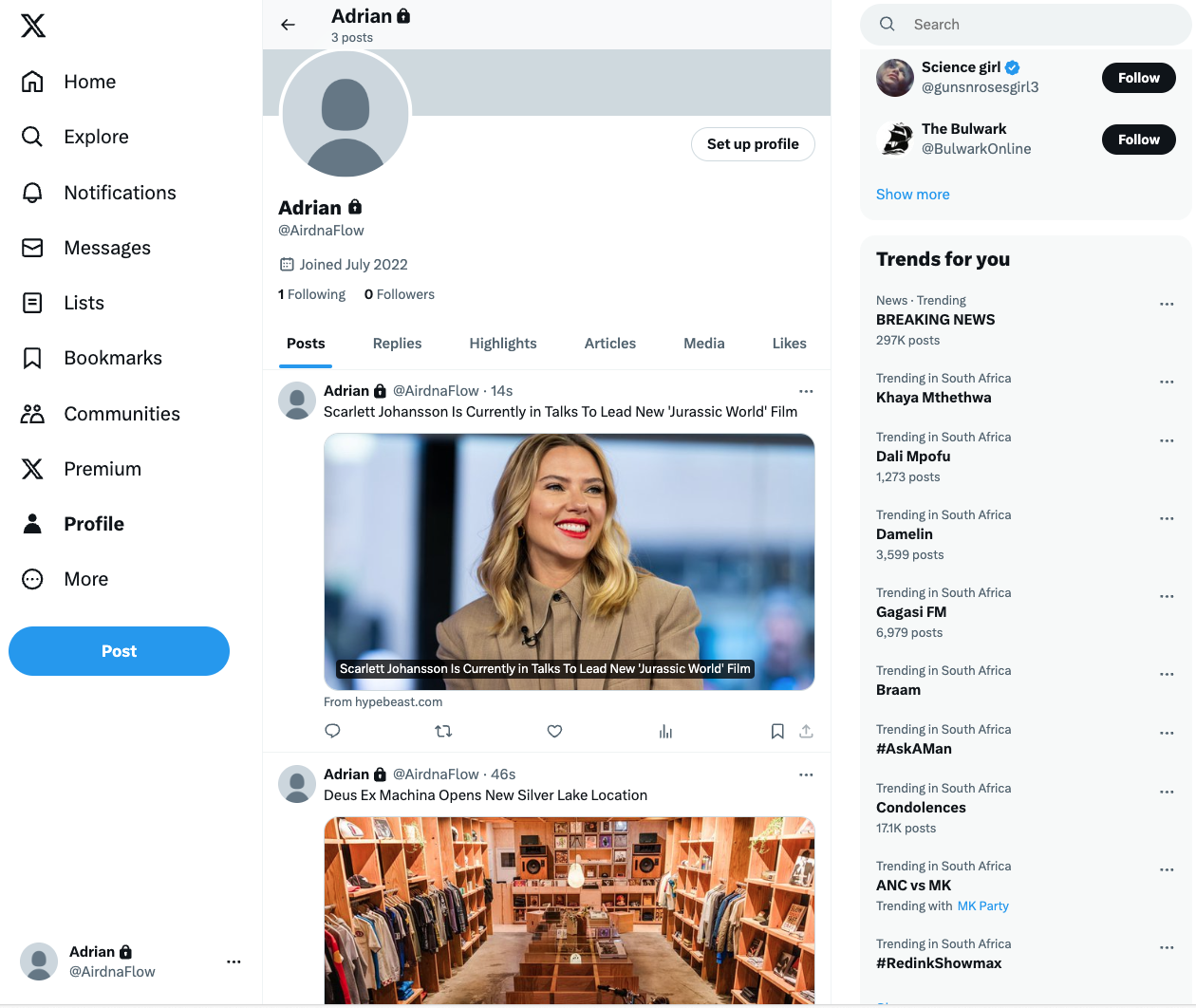

I’ll navigate to an X burner account I created a couple of years ago for testing the X API. Below are screenshots with the posts created:

Conclusion

In this blog post, we’ve delved into integrating Lambda functions into our AWS serverless architecture with CDK v2, granting permissions to newly created constructs and enabling a DynamoDB stream trigger that invokes a Lambda function to create a post on X.

In an upcoming blog post, we will shift our focus to testing constructs and Lambda functions locally.

Resources
